2015, December

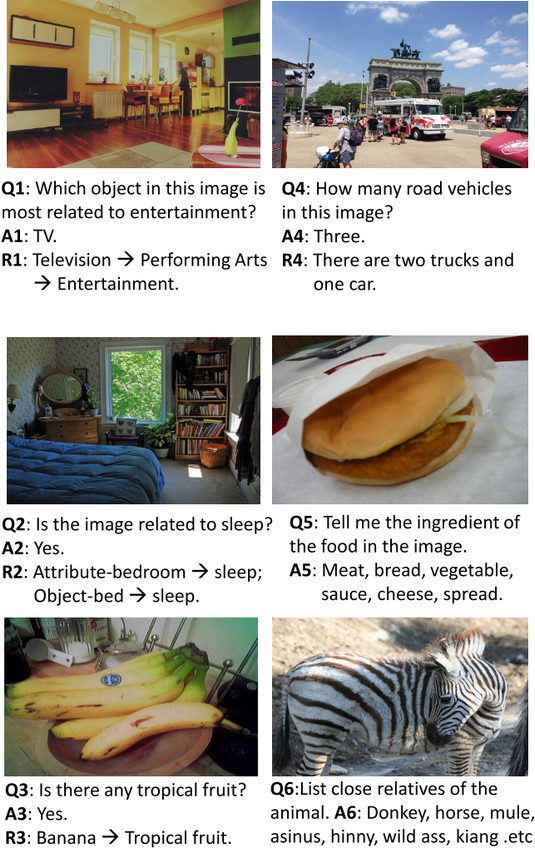

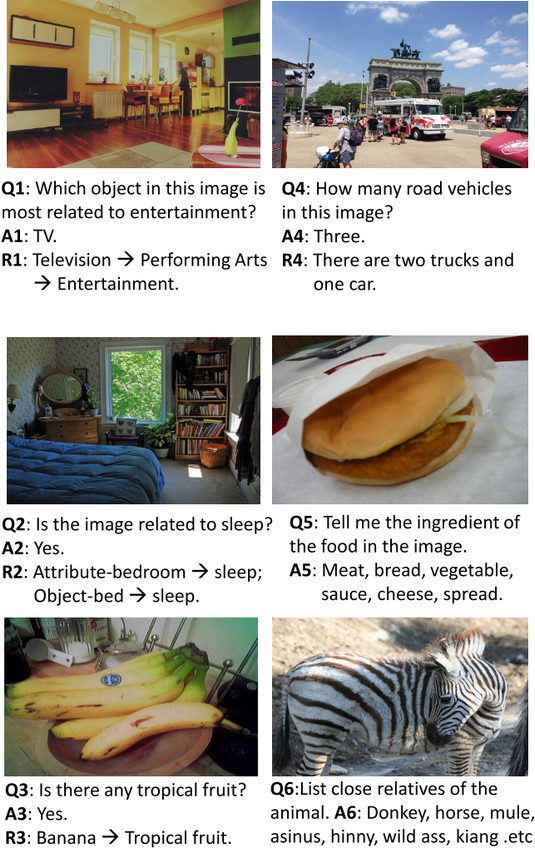

Visual question answering (VQA) is distinct from many problems in Computer Vision because the question to be answered is not determined until run time. In more traditional problems such as segmentation or detection, the single question to be answered by an algorithm is predetermined, and only the image changes. In visual question answering, in contrast, the form that the question will take is unknown, as is the set of operations required to answer it. In this sense it more closely reflects the challenge of general image interpretation.

VQA typically requires processing both visual information (the image) and textual information (the question and answer). One approach to Vision-to-Language problems such as VQA and image captioning, which interrelate visual and textual information, is based on a direct method pioneered in machine translation. Rather than building a high-level representation of the text and re-rendering that understanding in a different language, the direct approach encodes the input text with a Recurrent Neural Network (RNN) and passes the encoding to a second RNN for decoding. This is significant because the method performs well even though it does not form a high-level representation of the meaning of the text.
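The encode-then-decode idea can be illustrated with a minimal sketch. It is not the model from this work: it assumes a plain tanh RNN, one-hot token inputs, random untrained weights, and greedy decoding, and it covers only the text-to-text case from machine translation. All names (`init_params`, `encode`, `decode`, the token ids) are hypothetical and chosen for illustration.

```python
import math
import random


def make_matrix(rows, cols, rng):
    # Small random weights; a real model would learn these from data.
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def one_hot(index, size):
    vec = [0.0] * size
    vec[index] = 1.0
    return vec


def rnn_step(w_in, w_rec, x, h):
    # Vanilla RNN update: h' = tanh(W_in x + W_rec h)
    a = matvec(w_in, x)
    b = matvec(w_rec, h)
    return [math.tanh(p + q) for p, q in zip(a, b)]


def init_params(vocab_size, hidden_size, seed=0):
    rng = random.Random(seed)
    return {
        "enc_in": make_matrix(hidden_size, vocab_size, rng),
        "enc_rec": make_matrix(hidden_size, hidden_size, rng),
        "dec_in": make_matrix(hidden_size, vocab_size, rng),
        "dec_rec": make_matrix(hidden_size, hidden_size, rng),
        "dec_out": make_matrix(vocab_size, hidden_size, rng),
    }


def encode(params, token_ids, vocab_size, hidden_size):
    # The encoder RNN reads the input tokens one at a time; its final
    # hidden state is the fixed-length encoding of the whole sequence.
    h = [0.0] * hidden_size
    for token in token_ids:
        x = one_hot(token, vocab_size)
        h = rnn_step(params["enc_in"], params["enc_rec"], x, h)
    return h


def decode(params, encoding, vocab_size, start_id, end_id, max_len):
    # The decoder RNN starts from the encoding and emits one token per
    # step, feeding each chosen token back in as the next input.
    h = list(encoding)
    prev = start_id
    output = []
    for _ in range(max_len):
        x = one_hot(prev, vocab_size)
        h = rnn_step(params["dec_in"], params["dec_rec"], x, h)
        scores = matvec(params["dec_out"], h)
        prev = max(range(vocab_size), key=scores.__getitem__)  # greedy choice
        if prev == end_id:
            break
        output.append(prev)
    return output


VOCAB_SIZE, HIDDEN_SIZE = 8, 5   # toy sizes, assumed for illustration
START_ID, END_ID = 0, 1          # hypothetical special tokens

params = init_params(VOCAB_SIZE, HIDDEN_SIZE)
encoding = encode(params, [2, 5, 3, 7], VOCAB_SIZE, HIDDEN_SIZE)
decoded = decode(params, encoding, VOCAB_SIZE, START_ID, END_ID, max_len=6)
```

Because the weights are untrained, the decoded tokens carry no meaning; the point is the data flow. The only thing passed from encoder to decoder is the hidden-state vector `encoding`, with no explicit high-level representation of what the input says.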

A paper describing this work is available here, and two more are about to be released.